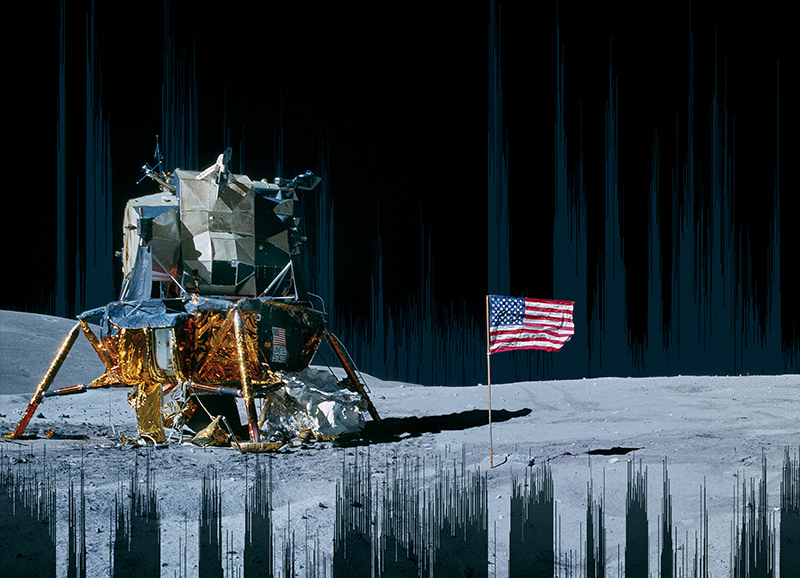

Recovering Audio from the Apollo Moon Missions

The Apollo program was a pivotal scientific and engineering accomplishment in the history of space exploration, but until recently, most people had heard only soundbites from the missions. Thanks to the work of Dr. John Hansen, professor of electrical and computer engineering, and voice recognition researchers at UT Dallas, the general public can now listen to the conversations among the engineers, astronauts and backroom support staff who made up the Apollo missions.

“Almost all logistics support during missions were done through audio,” Hansen said. “That’s a real challenge when hundreds of NASA personnel all needed to work together while not necessarily seeing each other.”

The audio archives, including much of Neil Armstrong’s famous moon landing remarks, were stored on outdated analog tapes until Hansen and his team made them accessible.

Hansen’s team at the Center for Robust Speech Systems (CRSS) in the Erik Jonsson School of Engineering and Computer Science (the Jonsson School) received a National Science Foundation grant in 2012 to convert the extensive tape archive into Explore Apollo, a public education and research website. The project, in collaboration with researchers at the University of Maryland, included audio from all of Apollo 11 and most of the Apollo 13, Apollo 1 and Gemini missions.

Hansen also currently serves as associate dean for research in the Jonsson School, a professor in the School of Behavioral and Brain Sciences, and Distinguished Chair in Telecommunications. He is also president of the International Speech Communication Association (ISCA).

A Giant Leap for Speech Technology

The communications for the Apollo missions comprised more than 200 14-hour analog tapes, each with 30 tracks of audio. The tracks capture all communications among mission control specialists, astronauts and backroom support teams, but they are difficult to process because of garbled speech, technical interference and overlapping air-to-ground loops. Imagine Apple’s Siri trying to transcribe discussions with as many as 35 different people simultaneously using NASA’s unique lexicon, some speaking with regional Texas accents. Transcribing and reconstructing the audio archive required specialized advances in speech processing and language technology.

The project, led by Hansen and his research scientist Dr. Abhijeet Sangwan, included a team of doctoral students who digitized the archives, then developed algorithms to reorganize and analyze the audio. The algorithms are described in the November 2017 issue of IEEE/ACM Transactions on Audio, Speech and Language Processing.

Seven undergraduate UTDesign® teams supervised by CRSS helped create the Explore Apollo website. The University’s Science and Engineering Education Center (SEEC) also evaluated the Explore Apollo site.

Refining Retro Equipment

For decades, NASA played such tape reels using a 1960s device called SoundScriber, located at the NASA Johnson Space Center in Houston.

The device could read one track at a time, and the user had to manually rotate a handle to move the tape read head from one track to another. Hansen estimated that, with this equipment, it would take at least 170 years to digitize just the Apollo 11 mission audio.

“We couldn’t use that system, so we had to develop a new solution,” Hansen said. “We designed our own 30-track read head and built a parallel solution to capture all 30 tracks at once. This is the only solution that exists worldwide.”

The new read head cut the digitization process to four months. Tuan Nguyen, a biomedical engineering senior with CRSS, spent a semester in Houston working on the project.
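To see why reading all 30 tracks at once matters, here is a rough back-of-the-envelope sketch in Python. It uses only the tape figures quoted in this article (treating "more than 200" tapes as exactly 200) and assumes real-time playback running around the clock. Those playback assumptions are illustrative and are not Hansen's own model; his 170-year estimate for the old equipment evidently reflects practical constraints that this sketch does not attempt to capture.

```python
# Figures quoted in the article; "more than 200" is treated as exactly 200.
TAPES = 200
HOURS_PER_TAPE = 14
TRACKS_PER_TAPE = 30


def playback_hours(tracks_read_at_once: int) -> int:
    """Hours of continuous real-time playback needed to capture every track.

    Assumption (ours): each pass over a tape takes its full 14 hours and
    captures `tracks_read_at_once` tracks.
    """
    # Ceiling division: number of passes needed to cover all 30 tracks.
    passes_per_tape = -(-TRACKS_PER_TAPE // tracks_read_at_once)
    return TAPES * HOURS_PER_TAPE * passes_per_tape


serial_hours = playback_hours(1)     # one track per pass, as on the old device
parallel_hours = playback_hours(30)  # all 30 tracks per pass, as on the new read head

print(f"One track at a time: {serial_hours} hours ({serial_hours / 24:.0f} days)")
print(f"All 30 tracks at once: {parallel_hours} hours ({parallel_hours / 24:.0f} days)")
```

Under these assumptions, capturing all 30 tracks in one pass needs 2,800 hours of nonstop playback, or about 117 days, which is in the same range as the four months reported above. One track at a time needs 30 times as long before any manual handling is counted.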


Revealing the Heroes Behind the Heroes

After digitizing the files, researchers created software that could detect speech activity and track what each person said and when, a process known as diarization. Researchers also tracked speaker characteristics to analyze how individuals reacted in tense situations, and they uncovered how backroom support teams worked at NASA during the missions.
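As a minimal illustration of what "detecting speech activity" means, the Python sketch below flags stretches of a signal whose short-term energy exceeds a threshold. This is a textbook energy-based detector run on a synthetic tone, not the CRSS algorithms, which had to cope with noisy, overlapping channels. The function names, the 20-millisecond frame size and the threshold are all assumptions chosen for the example, and the sketch does not attempt diarization (deciding who is speaking).

```python
import math


def frame_energies(samples, frame_len):
    """Mean squared amplitude of each non-overlapping frame."""
    return [
        sum(x * x for x in samples[i:i + frame_len]) / frame_len
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def detect_active_segments(samples, sample_rate, frame_ms=20, threshold=0.01):
    """Return (start_sec, end_sec) spans whose frame energy exceeds threshold."""
    frame_len = int(sample_rate * frame_ms / 1000)
    energies = frame_energies(samples, frame_len)
    segments = []
    start = None  # index of the first frame in the current active span
    for idx, energy in enumerate(energies):
        active = energy > threshold
        if active and start is None:
            start = idx
        elif not active and start is not None:
            segments.append((start * frame_len / sample_rate,
                             idx * frame_len / sample_rate))
            start = None
    if start is not None:  # signal ended while still active
        segments.append((start * frame_len / sample_rate,
                         len(energies) * frame_len / sample_rate))
    return segments


# Synthetic stand-in for audio: 1 s of silence, 1 s of a 440 Hz tone, 1 s of silence.
RATE = 8000
silence = [0.0] * RATE
tone = [0.5 * math.sin(2 * math.pi * 440 * n / RATE) for n in range(RATE)]
signal = silence + tone + silence

print(detect_active_segments(signal, RATE))
```

On this synthetic signal the detector reports a single active span from 1.0 to 2.0 seconds. Real mission audio would need a far more robust approach, since a fixed energy threshold cannot separate speech from interference or from other talkers on the same loop.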

“This is not something you learn in a class,” said Chengzhu Yu PhD’17. Yu began his doctoral program at the start of the project and now works as a research scientist focusing on speech recognition at Tencent Holdings Ltd.’s artificial intelligence research center in Seattle.

The team has demonstrated the interactive website at the Perot Museum of Nature and Science in Dallas. For Hansen, the project has highlighted the work of many people involved in the lunar missions.

“When we think of Apollo, we often think about the astronauts who deserve our admiration. However, the heroes behind the heroes represent the countless engineers, computer scientists and specialists who ensured the success of the Apollo program,” Hansen said. “I hope our students today might be inspired from such Apollo team efforts to collaborate and commit their experiences in diverse STEM fields to address tomorrow’s complex challenges.”
