Making More Efficient AI

Materials Science and Engineering Researchers Recognized

Dr. Jiyoung Kim’s laboratory specializes in novel memory device materials, fabrication and applications, and atomic layer deposition applications

Dr. Jiyoung Kim, professor of materials science and engineering, and researchers in his lab were recently recognized for developing a high-performance selector and for publishing research on emerging non‑volatile memory (NVM). New hardware designed for artificial intelligence (AI) is drawing considerable attention, particularly technologies that promise significantly better power efficiency. Both a more advanced selector and new memory components are needed to build hardware that operates at low power, a must for enabling widespread use of AI technology.

Dr. Jiyoung Kim’s laboratory, housed at the Erik Jonsson School of Engineering and Computer Science, is making strides toward solving this problem. Harrison Kim PhD’20 won the best student presentation prize at the Non‑Volatile Memory Technology Symposium in October 2019 for his work on developing a selector for crossbar arrays with potential AI applications, titled “Volatile Switching Characteristics Per Doping Criteria for Understanding Improved Reliability on Ag-based Selector.” The Non-Volatile Memory Technology Symposium is a highly selective, invitation-only event for academic and industry researchers working on conventional non-volatile memory. Researchers from Sandia National Laboratories, Intel Corp., Micron® Technology, Applied Materials®, STMicroelectronics, IBM and other organizations attended the event.

Developing emerging NVM is as important as developing a high-performance selector. Principal investigator Kim and his group contributed research to articles that were selected three consecutive times as an Editor’s Pick and featured article in Applied Physics Letters. Dr. Yong Chan Jung and Dr. Heber Hernandez-Arriaga, as well as Jaidah Mohan and Akshay Sahota from Jiyoung Kim’s group, were co-researchers for the selector and NVM work.

Applied Physics Letters is widely considered a premier publication for materials science researchers and features a strict publication process. The group’s research addressed techniques to solve common problems with creating commercial ferroelectric random-access memory (FRAM), an alternative type of random-access memory that uses about 99 percent less electricity than conventional dynamic random-access memory (DRAM).

Ferroelectrics can support analog-type NVM operation, which allows them to serve as a hardware component for deep learning. The group’s ferroelectric materials were fabricated at 400 degrees Celsius, a lower-than-normal temperature for this process. Mohan, who has been studying ferroelectric materials for his PhD, said, “These low temperatures can help in easy integration to the back end of the line, or the second part of integrated circuit fabrication.”

Jiyoung Kim works closely with other UT Dallas materials science and engineering researchers, including Dr. Luigi Colombo and Dr. Chadwin Young. His team’s work is highly esteemed within the field and attracts researchers from across the globe.

“Without hesitation, I joined Dr. Kim’s laboratory after completing my master’s degree in South Korea,” Harrison Kim said. “The work is comprehensive and multidisciplinary. I was also interested in hands-on processing, working on the fundamentals of electronics and devices.”

Harrison Kim, who previously studied atomic layer deposition, a technique used for creating nanoscale electronics, said that NVM is as important as a reliable selector in nanoelectronics. After delivering a virtual dissertation defense during the coronavirus pandemic lockdown, he completed his PhD in the spring of 2020 and accepted a new role at Intel. There, he is applying his UT Dallas lab experience to the effort to enable more efficient AI technology.

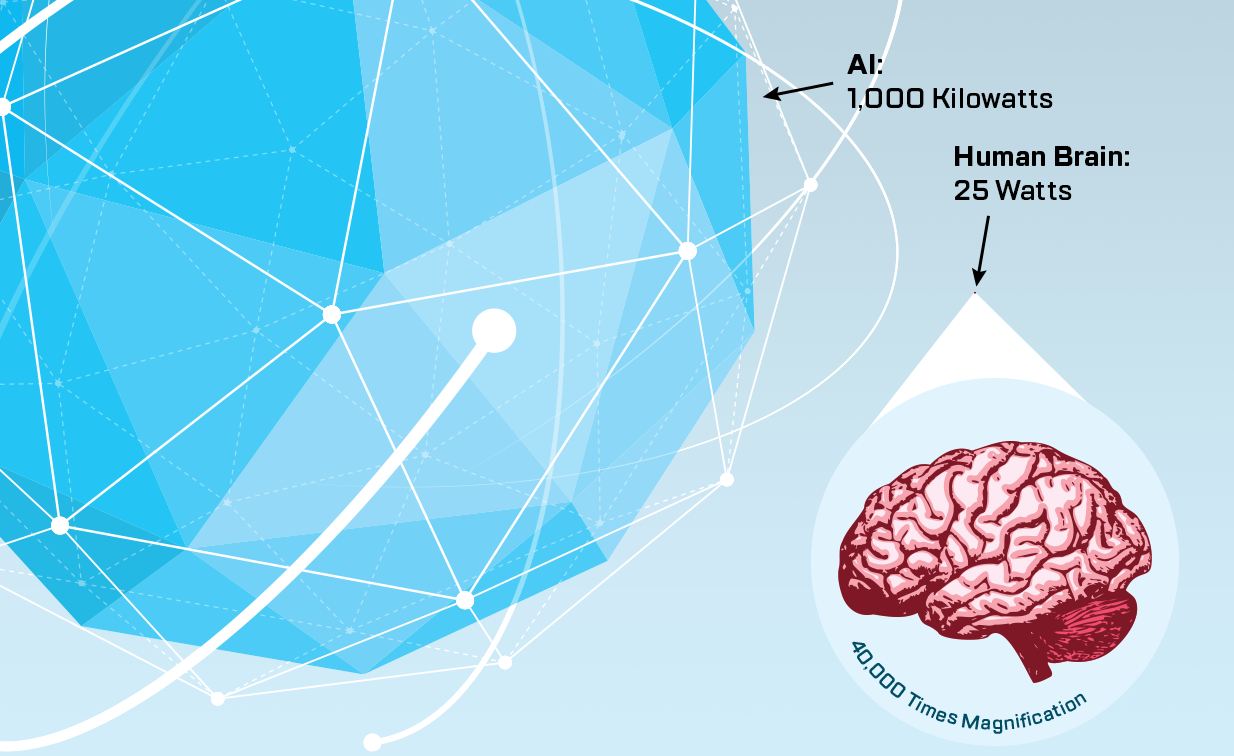

AI vs. Human

To explain the need for substantially more efficient nanoelectronics, Harrison Kim PhD’20 gave one popular example. While the computer program AlphaGo famously beat world champion Lee Sedol in a five-game match of the strategy game Go in 2016, it also consumed a substantial amount of power. AlphaZero, introduced in 2017, was an improvement but still required as many as 1,000 kilowatts, compared with just around 25 watts for the human brain. For autonomous vehicles and other AI innovations to become a part of daily life, reduced power dissipation is essential.